Listing of efforts

- Refurbished PC rebuilding

- Drupal Web Site Build

- Kismet with Google Earth (wardriving)

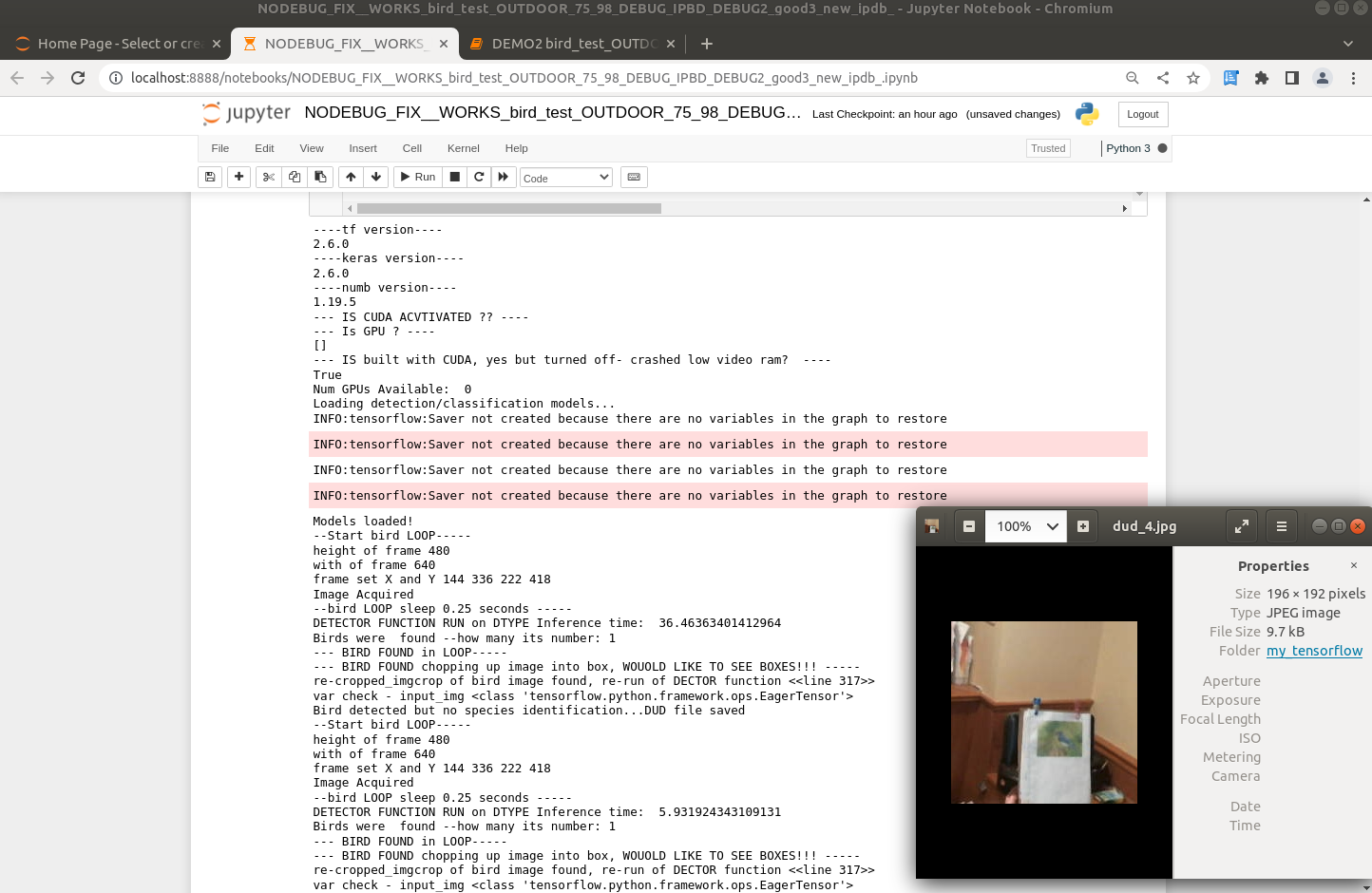

Python & TensorFlow - AI video capture

Note: My key project for adopting Python and TensorFlow is the outdoor bird cam project (author: Cmoon); see the GitHub raw code.

It took quite a bit of time and effort to get this running. It required new hardware and a steep learning curve on CUDA-ready video cards, which recent TensorFlow versions need for the GPU option (and which are expensive). I collected roughly entry-level components to get into the game and used my past Ubuntu and Dell hardware knowledge to move ahead. This is consistent with my self-learning path, as described in the other efforts/projects on this page.

Currently, I have Python 3.6 and TensorFlow 1.15 running to support or re-create the project above. It was quite a trip and learning curve in hardware, software, and NVIDIA cards. You need an Intel Sandy Bridge CPU or newer to start (the prebuilt TensorFlow binaries from 1.6 onward use AVX instructions, which Sandy Bridge introduced), and then an NVIDIA GTX 970 or better for the GPU option to run the versions mentioned. (I added lots of debug and print statements, and even found the ipdb.set_trace() command.)

SAMPLE RUN OF CODE

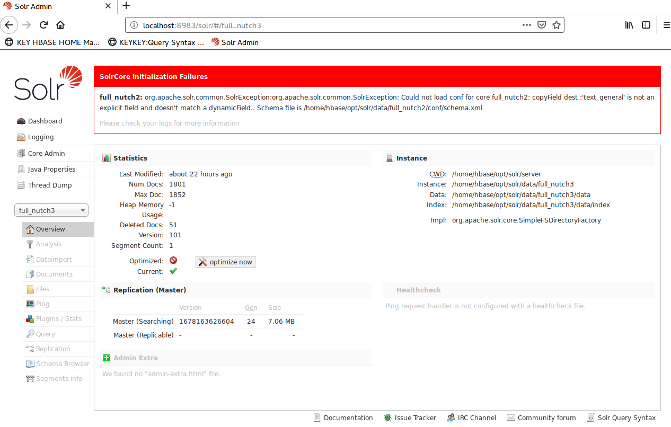

Big Data - Nutch Crawl {w/ Solr results}

With Hadoop already set up, I started to look into web crawling, and Nutch surfaced. I found that HBase works off of HDFS, which is core to Hadoop, so I went forward with that tool. It was a huge effort, since there were so many config files and network setup details, but because it is part of the Apache family of tools, some of it was close to home. I had to use the single automated crawl script to get it to work; the step-by-step command approach did not work for me. {Commands below; the key is to start in the correct folder.}

Crawl Command:

| cd {**runtime/local** folder} |

| ./bin/crawl {**nutch folder**}/urls/seed.txt xxxyyycr http://localhost:8983/solr/full_nutch3 3 |

I found way too much detail on multi-node clusters; my focus was on a single node to start. Big lesson for me: stick to the 1.x branch, since the 2.x branch has been discontinued. {Who would have expected... not the latest and greatest here.}

Solr Final Result:

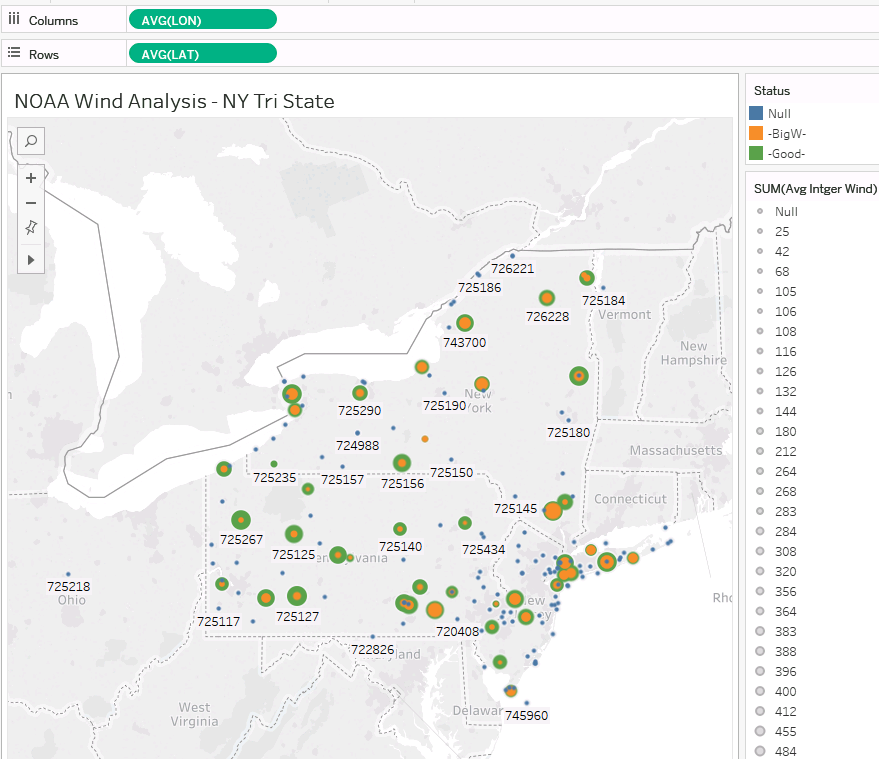
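To check the crawl output without the Solr admin screen, the index can be queried over Solr's standard `/select` handler. The sketch below is a minimal illustration, not part of the original project: it reuses the `full_nutch3` core URL from the crawl command above and assumes the Nutch Solr schema in use exposes `url` and `title` fields.

```python
import json
from typing import List, Tuple
from urllib.parse import urlencode

# Core URL copied from the crawl command above (local Solr on its default port).
SOLR_CORE_URL = "http://localhost:8983/solr/full_nutch3"


def build_select_url(query: str = "*:*", rows: int = 10) -> str:
    """Build a Solr /select URL that returns the url and title fields as JSON."""
    params = {"q": query, "rows": rows, "fl": "url,title", "wt": "json"}
    return f"{SOLR_CORE_URL}/select?{urlencode(params)}"


def summarize(response_text: str) -> Tuple[int, List[str]]:
    """Return (total matching documents, URLs on this result page)."""
    resp = json.loads(response_text)["response"]
    # Assumes each indexed Nutch document carries a "url" field.
    return resp["numFound"], [doc.get("url", "") for doc in resp["docs"]]
```

With Solr running, `summarize(urllib.request.urlopen(build_select_url()).read().decode("utf-8"))` gives the total hit count and the first page of crawled URLs.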

Big Data - HADOOP {w/ Tableau graphics}

Looking to gain big data exposure, I decided to teach myself HADOOP using sources such as the White-Safari book, Yahoo articles, and YouTube. Eclipse was familiar to me from earlier Java work, so I used it for coding the jars. I also chose to skip the Java Ant build options and used Eclipse's build options for the jar builds. Lastly, I decided to use the Apache open source version of HADOOP on local hardware boxes. Since my network had multiple Ubuntu machines with extensive Apache exposure, that was a simple choice.

Further details on the results are on the Hadoop project details page.

Project Focus and Points:

The NOAA example in the White-Safari HADOOP book got me started on other ideas for weather calculations, so I created a project to learn about the big data tools now being used in the market.

Problem Statement:

LIVING IN THE US MID-ATLANTIC REGION, FIND THE BEST TRI-STATE (NJ, PA, NY) LOCATION WITH DEPENDABLE, CONSISTENT WIND FOR POSSIBLE HOME WIND TURBINE USAGE.

Overview HADOOP Logic:

Mapper:

- Open or download the text-based historical data files for stations in the tri-state focus area.

- Pull the data fields relevant to the study out of these text files (i.e., Station Numb, Date, Max Wind, Min Wind, Mean Wind).

- Calculate the mean daily wind speed in knots; its integer form is the key sorting field.

- Create an output file entry only when the mean wind falls within the 8-18 knot range.
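The mapper steps above can be sketched in plain Python; this is an illustration, not the original Java jar built in Eclipse. It assumes a hypothetical whitespace-separated record (station number, AFB number, date, mean wind, max wind, in knots) rather than the real NOAA file layout, applies the 8-18 knot band to the integer key, and rounds half up because the sample table below maps a 10.50 knot mean to 11.

```python
import math
from typing import Iterable, Iterator, Optional, Tuple

MIN_KT = 8   # lower edge of the target wind band (knots)
MAX_KT = 18  # upper edge of the target wind band (knots)


def round_half_up(value: float) -> int:
    """Round to the nearest integer, with .5 going up (10.5 -> 11)."""
    return math.floor(value + 0.5)


def map_record(line: str) -> Optional[Tuple[int, str]]:
    """Emit (integer mean wind, tab-joined fields), or None to skip the line.

    Hypothetical record layout: station afb yyyymmdd mean_kt max_kt
    """
    fields = line.split()
    if len(fields) != 5:
        return None  # header or malformed line
    station, afb, date, mean_s, max_s = fields
    try:
        mean_kt, max_kt = float(mean_s), float(max_s)
    except ValueError:
        return None  # non-numeric wind values
    key = round_half_up(mean_kt)
    if not MIN_KT <= key <= MAX_KT:
        return None  # outside the 8-18 knot band: no output entry
    value = "\t".join([station, afb, date, f"{mean_kt:.2f}", f"{max_kt:.2f}"])
    return key, value


def run_mapper(lines: Iterable[str]) -> Iterator[Tuple[int, str]]:
    """Apply map_record to every line, keeping only emitted pairs."""
    for line in lines:
        pair = map_record(line)
        if pair is not None:
            yield pair
```

For example, `map_record("725140 14778 19801130 10.60 18.10")` returns key 11 with the five fields joined by tabs, matching the first row of the sample table.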

Reduce:

- Use the integer mean wind values to group the data.

- Output a CSV file with the key and the other calculated fields as text.
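A matching reducer can be sketched the same way, again as plain Python rather than the original Java. Hadoop sorts map output by key before the reduce phase, so `sorted()` stands in for that shuffle here, and `itertools.groupby` collects the records for each integer mean wind value into CSV rows. The tab-joined value layout (station, AFB number, date, mean wind, max wind) is a hypothetical assumption, and the extra table columns (Status, % Wind Change) are left out because their formulas are not given here.

```python
import csv
import io
from itertools import groupby
from operator import itemgetter
from typing import Iterable, Tuple

HEADER = ["Int Mean Wind", "Station Numb", "AFB Numb", "Date", "Mean Wind", "Max Wind"]


def reduce_to_csv(pairs: Iterable[Tuple[int, str]]) -> str:
    """Group (integer mean wind, tab-joined fields) pairs by key and return CSV text."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(HEADER)
    # sorted() is stable, so records keep their input order within each key.
    for key, group in groupby(sorted(pairs, key=itemgetter(0)), key=itemgetter(0)):
        for _, value in group:
            writer.writerow([key, *value.split("\t")])
    return out.getvalue()
```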

Sample: NOAA wind data extracted for 1980

| Int Mean Wind (knots) | Station Numb | AFB Numb | Date | Status | Mean Wind (knots) | Max Wind (knots) | % Wind Change |
|---|---|---|---|---|---|---|---|
| 11 | 725140 | 14778 | 19801130 | -Good- | 10.60 | 18.10 | 44.1 |
| 11 | 725140 | 14778 | 19801204 | -Good- | 11.00 | 15.00 | 34.9 |
| 11 | 725140 | 14778 | 19801221 | -Good- | 10.60 | 15.90 | 21.9 |
| 11 | 725144 | 99999 | 19800130 | -Good- | 11.00 | 15.90 | 28.2 |
| 11 | 725144 | 99999 | 19800311 | -Good- | 11.10 | 17.90 | 37.0 |
| 11 | 725144 | 99999 | 19800314 | -BigW- | 11.00 | 23.90 | 36.0 |
| 11 | 725144 | 99999 | 19800414 | -Good- | 10.80 | 14.00 | 48.9 |
| 11 | 725144 | 99999 | 19800428 | -Good- | 10.60 | 15.90 | 53.1 |
| 11 | 725144 | 99999 | 19800514 | -Good- | 11.10 | 15.90 | 71.1 |
| 11 | 725144 | 99999 | 19800609 | -Good- | 10.80 | 14.00 | 71.1 |
| 11 | 725144 | 99999 | 19800926 | -Good- | 11.20 | 17.90 | 69.1 |
| 11 | 725144 | 99999 | 19801025 | -BigW- | 10.70 | 23.00 | 55.9 |
| 11 | 725155 | 99999 | 19800717 | -BigW- | 10.50 | 48.00 | 82.0 |
| 11 | 725155 | 99999 | 19801013 | -Good- | 10.60 | 15.00 | 45.0 |
| 11 | 725155 | 99999 | 19801109 | -Good- | 11.40 | 15.00 | 50.0 |
| 11 | 725156 | 14748 | 19800111 | -Good- | 11.40 | 19.80 | 48.0 |

NOAA Wind Data on Tableau Public: data visual

URL of the working Tableau Public graphic: NOAA Wind Tableau Vis Link

Big Data - Hadoop on Amazon Customer Review - AWS

This is a second Big Data project, which I created from the Amazon Customer Reviews Dataset offered under AWS. Please see the sub-page link above for details. As with the project above, some processing ideas came from the White-Safari book.

Since I run Hadoop on my own hardware/cluster, ideas like this are easy to process at low cost. {FYI... If you are from a non-profit, or have a big data issue for a local club or beneficial cause, please contact me. I am happy to try to process the data for findings/results.}

Problem Statement:

USING THE AWS DATA SETS, FIND THE HIGHEST-VOLUME ITEMS PURCHASED, AND DETERMINE WHETHER THOSE TOTALS CAN BE ROLLED UP INTO PARENT PRODUCT GROUPS (e.g., Shoes vs. Shirts)

Solution Steps:

- Look into the AWS sample data sets provided.

- I chose not to review the AWS sample code referenced, since I run Apache open source Hadoop on my own hardware.

- The new challenge was that the data is in tab-separated (TSV) format, so I had to look up the correct Java approach for reading TSV files with the standard libraries.

Note: See the link above for the solution results.
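A minimal Python sketch of the TSV step (the original work used Java): it counts review rows per product and per category, treating review counts as a proxy for purchase volume, since the dataset holds reviews rather than sales. The column names `product_id`, `product_title`, and `product_category` are assumed to match the dataset's published TSV header; check them against your copy. `csv.QUOTE_NONE` keeps stray quote characters in review text from being treated as field delimiters.

```python
import csv
from collections import Counter
from typing import Iterable, Tuple


def count_reviews(tsv_lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Count review rows per (product_id, product_title) and per product_category."""
    reader = csv.DictReader(tsv_lines, delimiter="\t", quoting=csv.QUOTE_NONE)
    per_product: Counter = Counter()
    per_category: Counter = Counter()
    for row in reader:
        per_product[(row["product_id"], row["product_title"])] += 1
        # Rolling up into the parent group (e.g., Shoes vs. Shirts).
        per_category[row["product_category"]] += 1
    return per_product, per_category
```

On a real file, open it with `open(path, newline="", encoding="utf-8")` (the path is hypothetical) and pass the file object in; `per_product.most_common(10)` then lists the highest-volume items and `per_category.most_common()` the group totals.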

Rebuilding Refurbished PCs/Portables

Initially, I was looking for a cheap source of PCs to support all my projects. I soon discovered that purchasing used equipment on eBay and rebuilding it solved my needs. Through experience, I found that off-lease equipment offers the best deals. Another key factor in this space is to stick to sellers with good ratings.

Having a light hardware (HW) background as a past LAN Manager and an EE degree greatly helped here. Working with hardware is fun at times, especially when it all works correctly (spinning fans and BIOS beeps). But all the software in the world cannot solve a hardware issue. You can rest assured that you are often not alone with HW complications, so looking up past problems and other users' issues does help.

Note: Quite often, high-end desktops/portables would go for a fraction of their original cost.

Generally, from a hardware point of view, there are few moving parts: hard disks and cooling fans are at risk of failing, and the rest is all non-moving silicon.

Note: My view is that old Dells are the most dependable. The Dell support site used to be great for locating and downloading old drivers, but with the rebuilt site, it is hard to find old device drivers. {So Dell, I am not going to buy new HW no matter how hard you sell...}


Personal Standardized Refurbish steps

- Replace the hard drives with new ones and add DIMMs/RAM.

- Make the new disk the main OS drive, and keep the old disk for data storage.

- Load Ubuntu as the primary OS and Windows as the second (the GRUB 2 boot loader is a hassle, but it works). The key is that Windows needs to be installed first, on a separate disk.

- Use the old COA (Certificate of Authenticity) keys on the hardware case for Windows licenses.

Drupal Web site building

This is another self-taught technology platform, learned with the Safari books and Drupal.org. I was working with a consulting group in the media industry, proposing solutions using this CMS platform. Back in 2009, the software market had multiple competing CMS platforms; thankfully, with its open source architecture and teams of contributors, the Drupal CMS platform has come to dominate.

The site you are reading is built on Drupal, proof of my full-circle path here.

Initial Project Scope

- Read platform material, recreate the book examples, and build upon that knowledge

- Use both base and advanced modules to link to Amazon and collect user feedback on products

- Create a custom data type to support resume job descriptions

- Build the initial site on a local PC, then migrate it to an external hosting vendor

Initially, the site was built and run under Drupal 6 (D6) for three years. When planning for D7 support, I found that my previous hosting vendor was pulling away from Drupal and would provide only limited support. So the next phase was to change hosting companies and upgrade to D7, and both were accomplished. In addition, the DRUSH tool was activated and incorporated under D7; it greatly helped with the upgrade and migration routines.

Note: I was rather surprised at how onerous the task of upgrading Drupal versions was. Both key themes and modules used under D6 were discontinued or phased out. I think some parties who support this platform are using this as a money pot by making new versions too complex. It is sad that they are getting away from the simple approach that put this CMS on the map: it was a clean and simple CMS that worked, with an SQL backend to tweak.

Hosting change and D7 upgrade

- Review articles on the migration process and past user challenges.

- Build a second development site under D7; the key was to flip between versions to research issues.

- Install DRUSH on the local host and begin using its update and migrate routines.

- Move the development site to the new hosting vendor by overlaying the files/folders and restoring the MySQL database.

- Change over to themes that support the custom data types created.

In the next phase of the site build-out, I am looking to add Research and Personal sub-areas to the site.

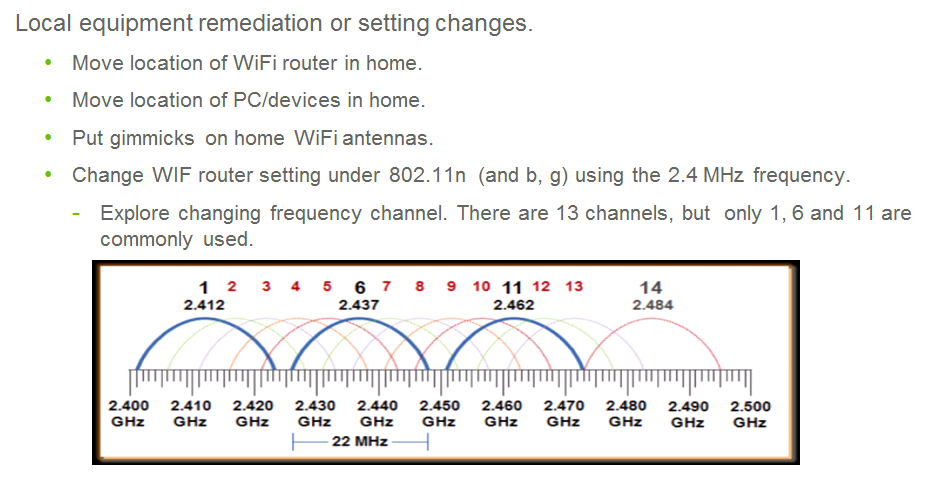

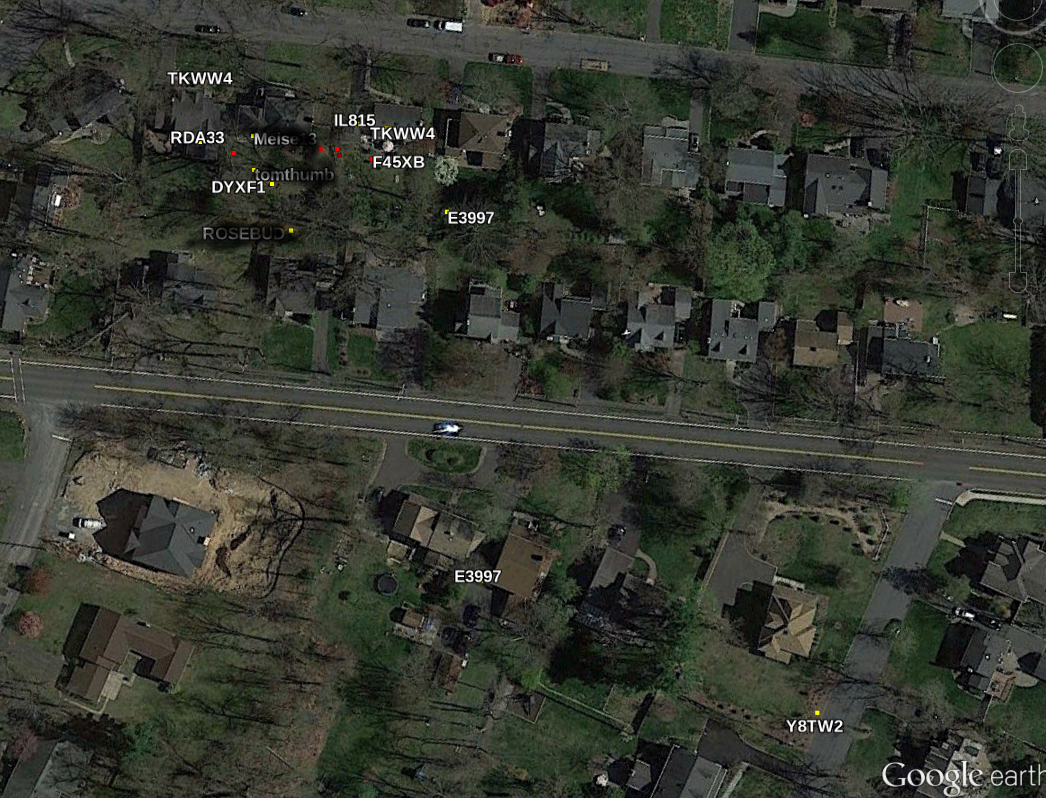

KISMET with Google Maps overlays (wardriving)

I was eager to explore the WIFI domain and the Internet of Things. Luckily, multiple tools existed for Ubuntu in this area. The tool chosen was KISMET, which has a steep learning curve with complex Linux and hardware settings. Still, setup was accomplished, and I came away with a better knowledge of hardware and distance limitations.

Lessons learned on the local network

Using the WIFI Tools and Results

Using the KISMET tools and UI can be overwhelming at first, since they show many factors at once. All of the Ctrl-x style commands seem almost DOS-like. Once the config file is correct and the tool can reach the NIC, it works dependably. Like many Ubuntu community programs, it takes time to set up and fit into your configuration (but the price is right: free).

Further into the effort, I spent a few weeks upgrading my WIFI equipment. I found that the standard TV coax cable run through my house could not carry WIFI signals with good results. A thinner WIFI coax, with its own unique connectors, needs to be used instead, and this cable has to be run through the house, say, to reach an antenna two floors up. I think the signal quality on the cables has to do with the signal frequency (cable loss grows at higher frequencies) and the shielding of these different wires.
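The effect of a long cable run can be estimated with simple dB arithmetic: cable loss scales with length, connectors add a fixed loss, and the surviving power fraction is 10^(-loss/10). The sketch below only shows that arithmetic; the attenuation figure in any real calculation must come from the cable's datasheet at the WIFI frequency, and the numbers in the usage note are hypothetical.

```python
def total_loss_db(atten_db_per_100ft: float, length_ft: float,
                  connector_loss_db: float = 0.0) -> float:
    """Cable loss grows linearly with run length; connectors add a fixed loss."""
    return atten_db_per_100ft * length_ft / 100.0 + connector_loss_db


def power_fraction(loss_db: float) -> float:
    """Fraction of the transmitted power that survives a given loss in dB."""
    return 10 ** (-loss_db / 10.0)
```

For a hypothetical cable rated at 20 dB per 100 ft, a 50 ft run up two floors loses 10 dB, so only 10% of the power reaches the antenna; every 3 dB of extra loss roughly halves what is left.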

While the KISMET logs are extensive, a lot of the low-level packet details collected are in hex/binary format, which is more than you need to see in some of the capture session logs. What was rewarding was creating a Google Maps extract file to see where the other networks were located in my neighborhood. I had no interest in cracking WEP keys to attach to other networks: I live in the area and am not looking to alienate folks, and my own WIFI works fine.
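A map extract like this is typically a KML file, which Google Earth can open directly. The sketch below writes one Placemark per network; the input tuples (SSID, BSSID, latitude, longitude) are a hypothetical, already-parsed form, not KISMET's actual log format, so a parser for your KISMET log version would sit in front of it.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

KML_NS = "http://www.opengis.net/kml/2.2"


def networks_to_kml(networks: Iterable[Tuple[str, str, float, float]]) -> str:
    """Build a KML document with one Placemark per (ssid, bssid, lat, lon)."""
    kml = ET.Element("kml", {"xmlns": KML_NS})
    doc = ET.SubElement(kml, "Document")
    for ssid, bssid, lat, lon in networks:
        placemark = ET.SubElement(doc, "Placemark")
        ET.SubElement(placemark, "name").text = ssid or "(hidden SSID)"
        ET.SubElement(placemark, "description").text = bssid
        point = ET.SubElement(placemark, "Point")
        # KML orders coordinates as longitude,latitude.
        ET.SubElement(point, "coordinates").text = f"{lon},{lat}"
    return ET.tostring(kml, encoding="unicode")
```

Writing the returned string to a `.kml` file and opening it in Google Earth drops a pin for each network seen.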

Google map overlay with KISMET Info


-------------------------------------------------